China leads in emotion recognition tech, reinforces privacy rules to tackle abuse

Stronger privacy protection needed to tackle abuse as emotion recognition tech races ahead

Tired and depressed after a long day's work, a man sits down and asks his smart stereo to play a song. The stereo immediately recognizes his emotional state and plays him a cheerful tune. As the melody plays, the man slowly perks up and his mood gradually improves.

Elsewhere, at a busy highway checkpoint, artificial intelligence (AI) early-warning systems silently observe the drivers and passengers of passing vehicles. Seconds later, security officers stop a car whose passengers look strangely nervous and find drugs inside.

Such scenes, reminiscent of science fiction, are becoming increasingly common in everyday life. In China, emotion recognition technology is developing rapidly amid the boom in big data and AI, and has been widely used in fields including health, counterterrorism and urban security, industry insiders told the Global Times.

"Currently, the AI emotion recognition tech is still led by the US, but its practical uses are already blossoming in China," said Wei Qingchen, head of EmoKit Tech Co., Ltd., which develops products based on its emotion recognition engine, Emokit.

Neuromanagement expert Ma Qingguo believes China's applications of the technology are unrivaled globally and "perhaps more advanced than the US" in non-military fields, thanks to the efforts of many domestic tech start-ups and research institutes.

"Emotion recognition is definitely the direction of humanity's future tech development," Ma, head of the Academy of Neuroeconomics and Neuromanagement at Ningbo University, told the Global Times.

High-precision recognition

In general, emotion recognition technology in China has reached a high level of precision in practical use. Products based on AI emotion recognition can achieve an average overall accuracy of 70 to 95 percent, according to Wei.

Wei said the Emokit system developed by his company can "accurately combine signals from video, audio and body sensors and perform multi-frequency overlay analysis."

In a clinical project with a renowned psychiatric hospital in Beijing, for instance, Emokit achieved a combined computerized accuracy of 78.8 percent in identifying schizophrenia, approaching the performance standard of clinical tests, Wei said. The system's accuracy in diagnosing depression from a patient's voice alone is nearly 70 percent, he added.

Back in 2018, tech firm Alpha Eye surprised the public with the precision of its emotion recognition technology. On a China Central Television (CCTV) program that year, the Alpha Eye product picked out the only real sniper among eight soldiers by observing their expressions, doing so faster than a renowned psychologist on the same program.

To many, it sounds inconceivable that machines and programs with no innate emotional intelligence can recognize human emotions, let alone do so accurately. Ma explained that there are currently two main methods of emotion recognition: a non-contact method, which observes facial expressions and behavior, and a contact method, in which the subject wears a device on the head that monitors his or her brainwaves.

"The former one is more practical, and the latter one is more precise," Ma noted.

Either method is superior to manual recognition, which inevitably involves subjective judgment, said Shi Yuanqing, CEO of Cmcross, a Shenzhen-based developer of emotion recognition and judgment technology.

"The current AI technology is able to capture physiological indicators that people cannot control, such as their subtle muscle tremors, heart rates, and blood pressure changes," Shi told the Global Times. "That makes the recognition process more accurate and objective."

Wide use

Thanks to its relatively high accuracy, emotion recognition technology is already used in many walks of life, from detecting possible mental illness to interrogating criminal suspects, domestic developers said.

"Most overseas research teams are still focused on recognizing emotional outcomes such as happiness and sadness, but Chinese companies are breaking through the application fields of this technology by further analyzing the motivation behind people's emotions," Wei remarked.

On road safety, Shi told the Global Times that the technology is expected to warn of abnormal behavior by drivers before it happens, by monitoring their physical and psychological indicators.

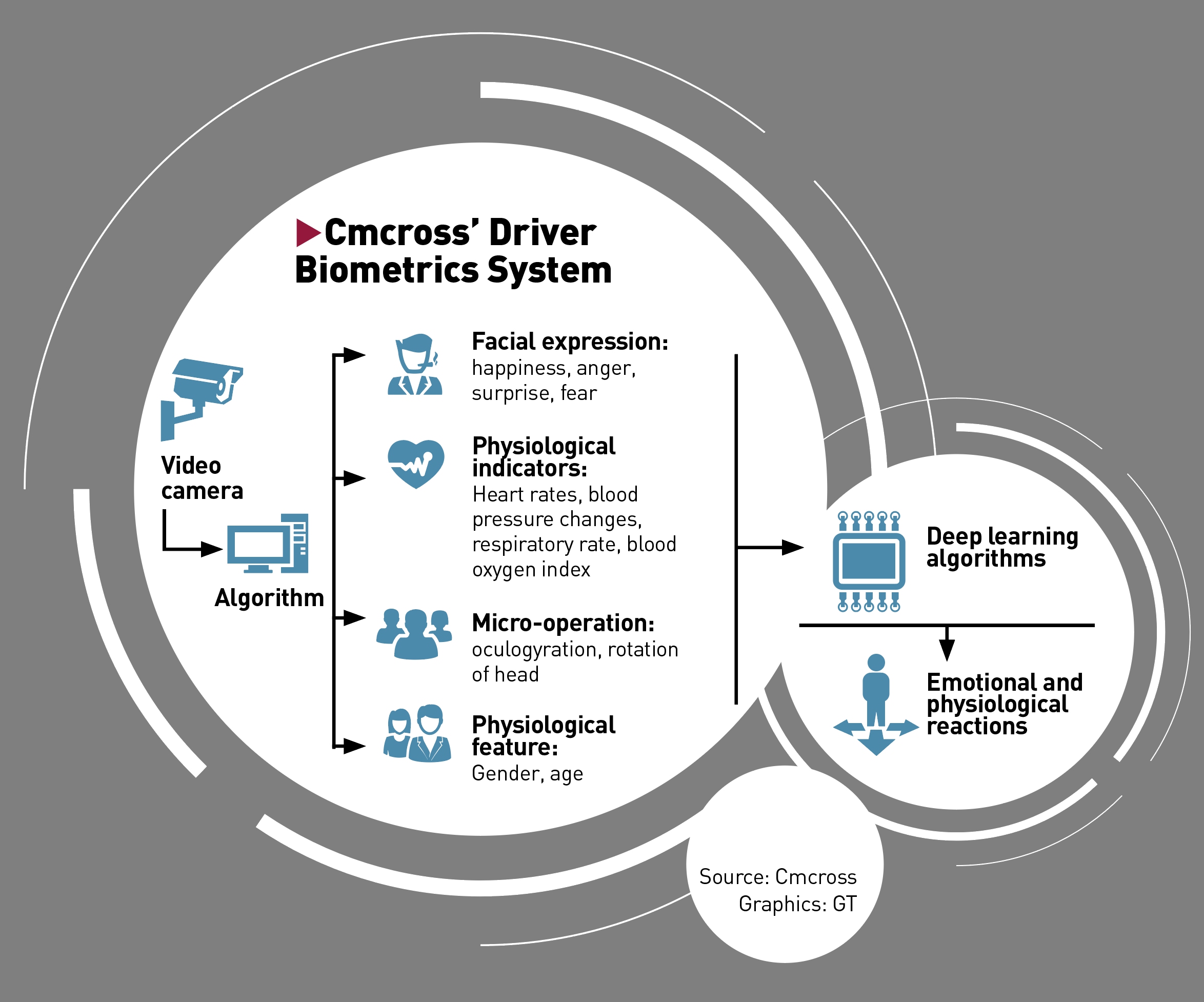

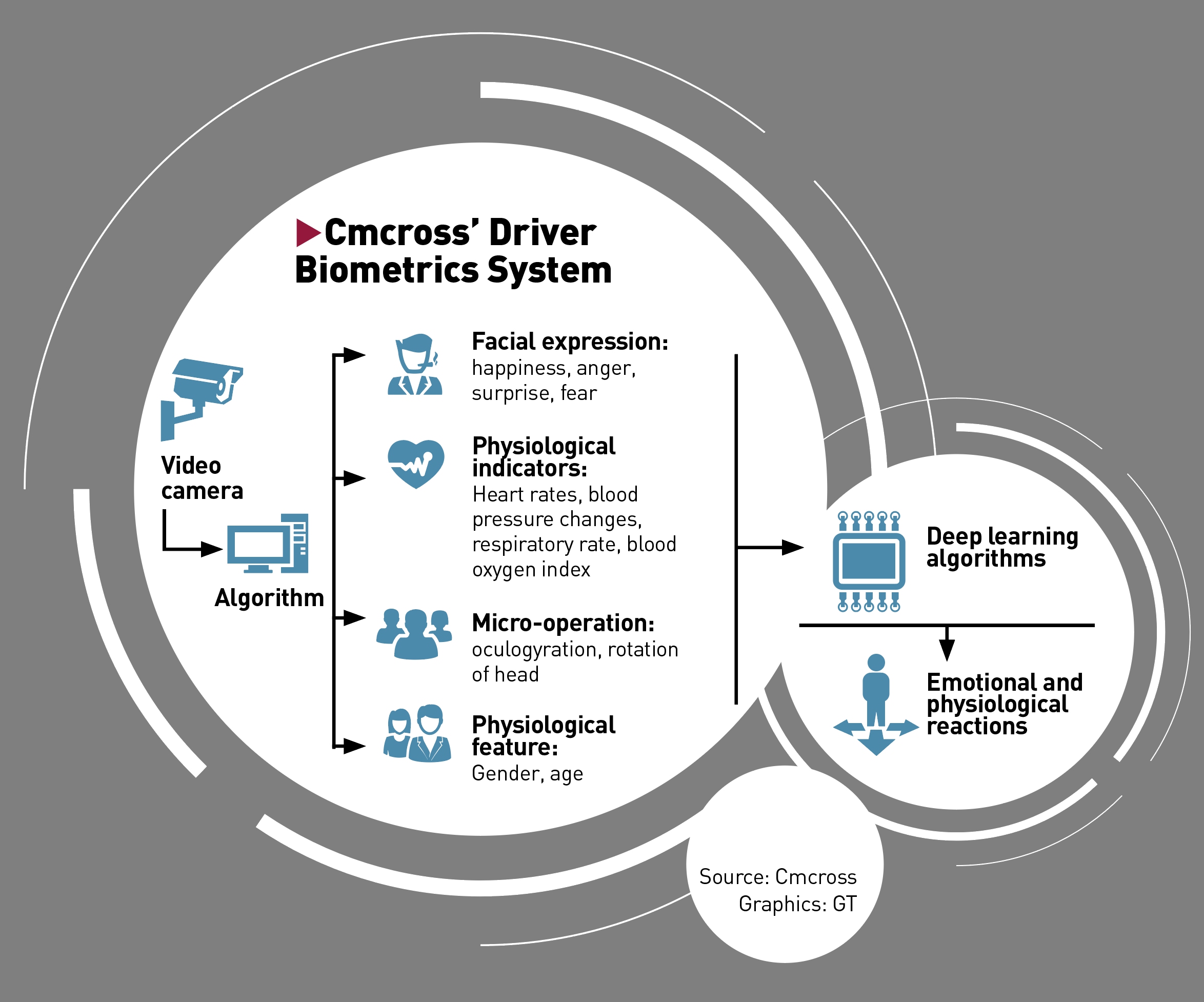

Shi's company has developed a Driver Biometrics System, which uses a camera mounted on the dashboard in front of the driver to capture his or her expressions, micro-movements and physiological characteristics, and then applies deep learning algorithms to analyze emotional and physiological reactions such as drowsiness and psychological tension levels.

Shi cited an incident in July 2020 in which a bus driver in Southwest China's Guizhou Province deliberately drove his bus into a local reservoir, killing 21 people. "More tragedies like this will be prevented from happening if we put emotion recognition tech into monitoring drivers' real-time status," he said.

Ma Qingguo noted the important role the technology can play in maintaining social stability and protecting people's lives.

He cited a 2009 shooting in the US state of Washington, in which ex-prisoner Maurice Clemmons fatally shot four Lakewood police officers at a coffee shop only one week after he was released from jail.

"It might not have happened if the jail had done a risk assessment on Clemmons and postponed his release after finding that he might pose a threat to others' safety," Ma said.

Back in China, emotion recognition has helped assess the risks posed by prisoners in a few regional prisons. In 2019, the criminal psychology research center of China University of Political Science and Law worked with a prison in Guangzhou, South China's Guangdong Province, to put "non-contact emotion recognition" technology into use in a prison setting for the first time, the Guangzhou Daily reported in November that year.

The technology helps prison officers evaluate whether a prisoner poses potential risks, including possible mental health problems and violent or suicidal tendencies, and estimate whether he or she is likely to reoffend after release, said Ma Ai, director of the center.

After a prisoner looks into the camera for three to four seconds, the system can read seven main physiological indicators, including body temperature, eye movement and heart rate, and convert them into psychological signals showing whether the prisoner is calm, depressed, angry or in some other state at that moment, Ma Ai noted.

The technology is "the most advanced in the world," Ma Ai told the Global Times, adding that it is currently in use at five or six prisons in China.

Outside China, emotion recognition is mainly used in medical care and public security, and researchers in developed countries including the US, Germany and Japan are also developing the technology. The fast-growing technology had created a $20 billion industry globally, The Guardian reported in March 2019.

Privacy concern

The booming emotion recognition industry has also sparked controversy over privacy protection around the world. Some people are uncomfortable with having their emotions captured and "read" by AI, and worry about possible abuse and leaks of personal information.

In India, a plan to monitor local women's facial expressions with facial recognition technology to prevent street harassment stirred public fears of privacy violations, Reuters reported in January.

As with facial recognition data, leaks of emotion data may trigger a series of negative effects that threaten people's personal and property safety, said Huang Rihan, executive dean of the Beijing-based think tank Digital Economy. "Corresponding laws, therefore, must keep pace with the development of new technologies," he noted.

China's legal system, including its first-ever Civil Code and the draft Personal Information Protection Law, seeks to strengthen citizens' privacy protection by regulating the collection and disclosure of personal information, Huang said.

"Authorities should also strengthen supervision and let the management run ahead of the technology development," he told the Global Times.

On customer privacy, Wei said that all of EmoKit Tech's services are provided with clients' authorization, ensuring that users are informed.

"All our partners are legitimate companies and organizations and the applications we jointly developed are legally compliant and in line with internationally accepted technical ethics," Wei said.

He added that data sources are identified automatically by AI algorithms, and in most cases company staff do not have access to the raw data.

In recent years, China's fast-developing AI technology has often been smeared by some Western institutions and media, which politicize the technology to attack China, observers said.

The latest case of slander involved a report published in January by the UK-based organization Article 19, which baselessly claimed that emotion recognition technology in China has a "detrimental impact on human rights," even though the technology is widely developed and used in many countries around the world.

Chinese industry insiders believe there is no need to demonize emotion recognition technology. "The right to life is a fundamental human right," said Ma Qingguo. "In some cases, emotion recognition can figure out dangerous or violent persons and help prevent their wrongdoings, which helps save people's lives."

Emotion computing, also known as affective computing, is not an evil "mind-reading" technique, Wei said. Whether it is used in financial anti-fraud, in judicial interrogation, or in the more general fields of intelligent education and healthcare, "we Chinese companies strictly follow the principle of 'technology for good,' with the aim of better serving people and not letting lawbreakers get away with it," he told the Global Times.

Conversely, at a busy highway checkpoint, artificial intelligence (AI) pre-warning systems silently observe drivers and passengers in vehicles passing through. Seconds later, security officers stop a car with passengers looking strangely nervous and discover drugs in the car.

These scenes reminiscent of science fiction are on the increase in quotidian life. In China, emotion recognition technology is fast developing in this data and AI era boom, and has been widely used in various fields including health, anti-terrorism, and urban security, industry insiders told the Global Times.

"Currently, the AI emotion recognition tech is still led by the US, but its practical uses are already blossoming in China," said Wei Qingchen, head of EmoKit Tech Co., Ltd. that specializes in developing products based on its emotion recognition engine Emokit.

Neuromanagement expert Ma Qingguo believes that China's application of this tech is also unrivaled globally, and "perhaps more advanced than the US" in non-military fields thanks to efforts by many domestic tech start-ups and related research institutes.

"Emotion recognition is definitely the direction of humanity's future tech development," Ma, head of the Academy of Neuroeconomics and Neuromanagement at Ningbo University, told the Global Times.

photo: Li Hao/GT

High-precision recognition

Generally, emotion recognition technology in China has reached high precision in its uses. Products based on AI emotion recognition can achieve an average comprehensive accuracy of 70 to 95 percent, according to Wei.

Wei revealed that the Emokit system developed by his company can "accurately combine signals from video, audio and body sensors and perform multi-frequency overlay analysis."

In a clinical project in partnership with a renowned psychiatric hospital in Beijing, for instance, Emokit has achieved a combined computerized accuracy of 78.8 percent for the identification of schizophrenia, approaching the standard of clinical testing performance, Wei said. The system's accuracy in diagnosing depression by merely listening to a patient's voice is nearly 70 percent, he added.

Earlier in 2018, tech firm Alpha Eye surprised the public by showing the pinpoint accuracy of its emotion recognition technology. In a TV program by China Central Television Station that year, the Alpha Eye product successfully distinguished the only real sniper from eight soldiers through observing their expressions, faster than a renowned psychologist did in the same program.

It sounds inconceivable to many that human emotions can be (accurately) recognized by some machines and programs that have no innate emotional intelligence. Ma explained that there are two main methods of emotion recognition at present, a non-contact one - by observing human facial expressions and behaviors; and a contact one - by a subject wearing a device on the head and having the brainwaves be monitored.

"The former one is more practical, and the latter one is more precise," Ma noted.

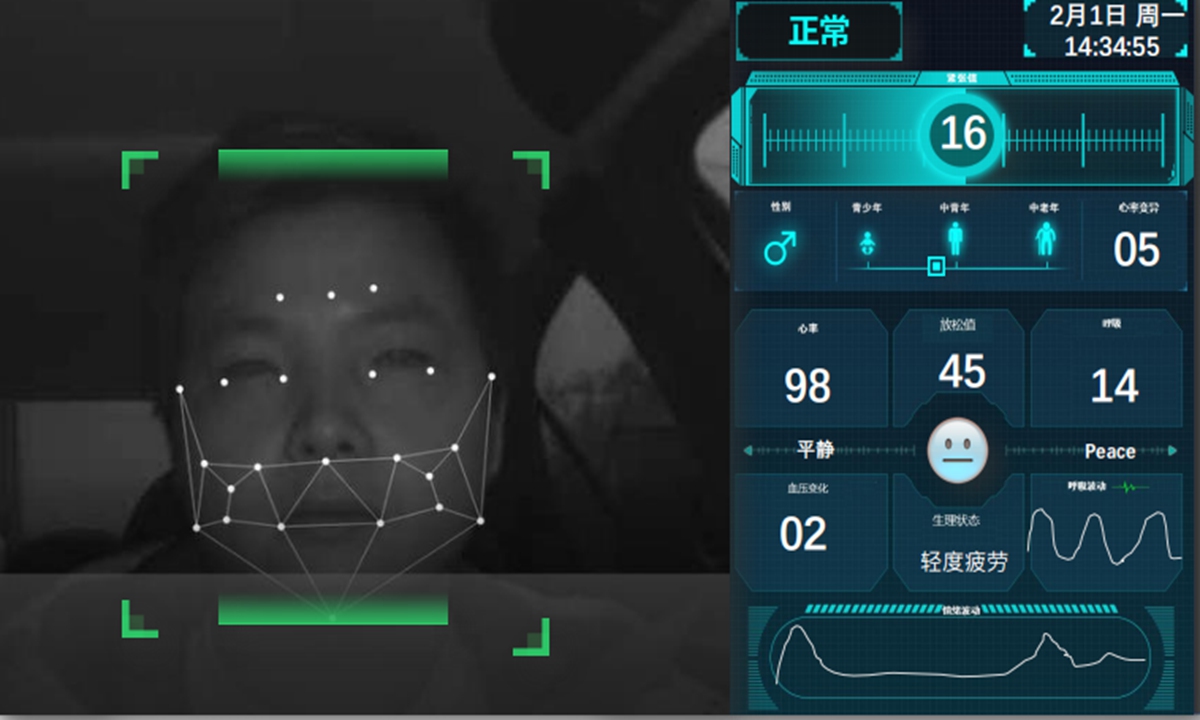

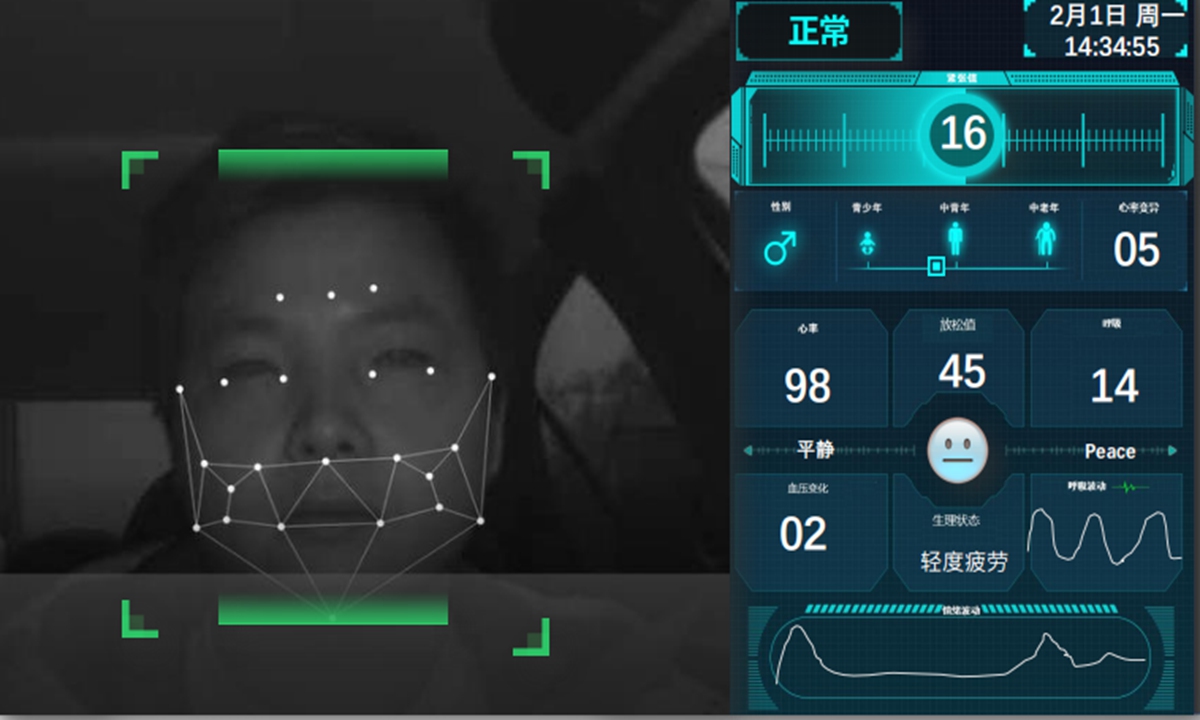

Either method is superior to manual recognition, which inevitably involves people's subjective judgments, said Shi Yuanqing, CEO of Cmcross, a Shenzhen-based emotion recognition and judgment tech development company.

"The current AI technology is able to capture physiological indicators that people cannot control, such as their subtle muscle tremors, heart rates, and blood pressure changes," Shi told the Global Times. "That makes the recognition process more accurate and objective."

The screenshot of the Cmcross' Driver Biometrics System

Wide use

With relatively high accuracy, emotion recognition technology has been used in various walks of life from detecting possible mental illness to interrogating criminal suspects, said domestic developers.

"Most overseas research teams are still focused on recognizing emotional outcomes such as happiness and sadness, but Chinese companies are breaking through the application fields of this technology by further analyzing the motivation behind people's emotions," Wei remarked.

In terms of road safety, the technology is expected to give early warnings before drivers have abnormal behaviors by monitoring their physical and psychological indicators, Shi told the Global Times.

Shi's company developed a Driver Biometrics System, which captures a driver's expressions, micro-movements and physiological characteristics through a camera mounted on the dashboard in front of the driver, and then analyzes his or her emotional and physiological reactions, such as drowsiness and psychological tension levels, through deep learning algorithms.

Shi mentioned an incident in which a bus driver in Southwest China's Guizhou Province intentionally crashed the bus into a local reservoir in July 2020, which killed 21. "More tragedies like this will be prevented from happening if we put emotion recognition tech into monitoring drivers' real-time status," he said.

Ma noted the important role this tech can play in maintaining social stability and protecting people's lives.

He referred to a shooting case that happened in the American city of Lakewood in 2009. In this case, ex-prisoner Maurice Clemmons fatally shot four police officers at a local coffee shop only one week after he was released from jail.

"It might not have happened if the jail had done a risk assessment on Clemmons, and postponed to release him after finding that he might have posed a threat to others' safety," Ma sighed.

Back in China, emotion recognition has contributed to the risk assessment of prisoners in a few regional prisons. In 2019, the criminal psychology research center under China University of Political Science and Law worked with a prison in Guangzhou, South China's Guangdong Province, in putting the "non-contact emotion recognition" tech into use in prison settings for the first time, Guangzhou Daily reported in November that year.

The technology helps prison officers to evaluate whether a prisoner presents potential risks, including possible mental problems and violent or suicidal tendencies, and estimate whether he or she is likely to repeat an offense after release, said Ma Ai, director of the center.

After a prisoner looks at the camera for three to four seconds, this recognition system can know his or her seven main physiological indexes including body temperature, eye movement, and heart rate, and convert them into psychological signs showing whether the prisoner is calm, depressed, angry or whatever else at that time, Ma Ai noted.

This technology is "the most advanced in the world," Ma Ai told the Global Times. At present, there are five to six prisons in China where this technology is in use, he added.

In the Western world, emotion recognition is mainly used in medical care and public security, and researchers from developed countries including the US, Germany, and Japan are also working on its tech development. This fast-growing technology had created a $20 billion-dollar industry globally, said The Guardian in its March 2019 coverage.

GT

Privacy concern

The mushrooming emotion recognition industry has also sparked controversy around the world on privacy protection. Some people are uncomfortable with their emotions being captured and "read" by AI technology, worrying about possible abuse and the leakage of personal information.

In India, a plan to monitor local women's expressions with facial recognition technology to prevent street harassment led to public fear for potential privacy violations, Reuters reported in January.

Similar to the facial recognition information, the leakage of emotion information may trigger a series of negative effects that threaten people's personal and property safety, said Huang Rihan, executive dean of the Beijing-based think tank Digital Economy. "Corresponding laws, therefore, must keep pace with the development of new technologies," he noted.

China's legal system, including its first-ever Civil Code and the Personal Information Protection Law of China (Draft), tries to improve citizens' privacy through regulating the collection and disclosure of personal information, Huang said.

"Authorities should also strengthen supervision and let the management run ahead of the technology development," he told the Global Times.

In terms of customer privacy protection, Wei pointed out that all the services provided by EmoKit Tech are based on the authorization of the clientele, ensuring that users are informed.

"All our partners are legitimate companies and organizations and the applications we jointly developed are legally compliant and in line with internationally accepted technical ethics," Wei said.

He added that the data sources are automatically identified through computerized AI algorithms, and company staffers do not have access to the raw data in most cases.

In recent years, China's fast-developing AI technology has often been smeared by some Western institutions and media, which politicize the technology to attack China, observers found.

The latest case of slander involved a report by the UK-based organization Article 19 published in January, which baselessly claimed that the emotion recognition tech in China has a "detrimental impact on human rights," despite this technology having been widely developed and used in many countries globally

Chinese industry insiders believe there is no need to demonize the emotion recognition technology. "The right to life is a fundamental human right," said Ma Qingguo. "In some cases, emotion recognition can figure out dangerous or violent persons and help prevent their wrongdoings, which helps save people's lives."

Emotion computing is not an evil "mind-reading" technique, Wei said. Whether it is used in financial anti-fraud, in judicial interrogation, or the more general fields of intelligent education and healthcare, "we Chinese companies strictly follow the principle of 'technology for good,' with the aim of better serving people and not letting lawbreakers get away with it," he told the Global Times.