Meta’s report on fake accounts reveals tip of iceberg of US ‘color revolution’ tactic

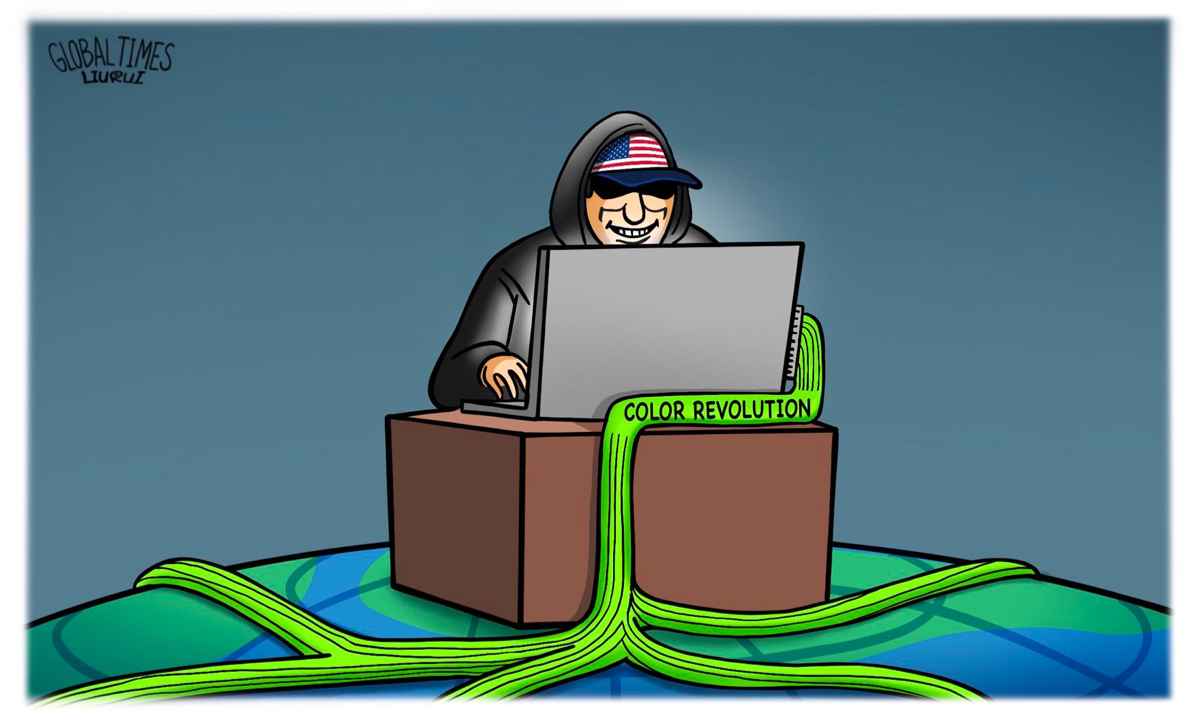

Illustration: Liu Rui/GT

Meta, the parent company of Facebook, Instagram, and WhatsApp, recently released a report revealing that people associated with the US military created fake accounts on more than seven internet services as part of a "coordinated inauthentic" influence operation targeting people in Central Asia and the Middle East. The disclosure attracted public attention. There is a precedent: in 2011, British media outlet The Guardian disclosed that the US military's Sock Puppet software creates fake online identities in order to spread pro-American propaganda. Facebook, which had not yet changed its name, kept silent about it at the time, and the story didn't get much attention. This time, however, Meta's report announced that it had taken down 39 Facebook and 26 Instagram accounts that were part of a coordinated campaign focused on countries such as Afghanistan, Algeria, Iran, Iraq, Kazakhstan, Kyrgyzstan, Russia, Somalia, Syria, Tajikistan, Uzbekistan, and Yemen.

It is easy to see that the geographical distribution of these targets is highly consistent with the US national security strategy. The content is of the classic "color revolution" type: posts on themes like sports or culture that emphasize cooperation with the US and criticize Iran, China, and Russia.

Meta said the campaign did not appear to catch on in local communities: the majority of the operation's posts had "little to no engagement from authentic communities." This implies that both the accounts posting the content and those interacting with it were most likely bot accounts. Such operations usually require funding, and their performance is evaluated on "actual results." If, as Meta said, authentic engagement was nearly absent, a problem arises: whether the US' traditional psychological warfare routines can still achieve their expected effects in cyberspace may be a delicate question.

The US military has always attached great importance to the strategic value of the internet, especially its "offensive use." Manipulating audiences by releasing certain types of information can achieve effects that previously required the dispatch of special forces. It also spares the US government various risks, so it has become one of the methods accepted and recognized by the US military. Given the actual record of the US in promoting "color revolutions" around the world, there is reason to believe that, in the process, the US has not only formed a set of systematic tactics and methods but also created a complete ecosystem. Driven by economic interests, bot accounts have emerged on a large scale, providing new support and impetus for the proliferation of false information on social media.

From the perspective of global cyberspace governance, the proliferation of false information and bot accounts, together with the widespread emergence of ecosystem-level information processing, distortion, and manipulation, is undoubtedly a systemic disaster. It is thus surprising that Meta, which faced political pressure during the Donald Trump administration over the "Russian agents" allegations, was able to release such a report this time.

Consider this alongside an earlier incident: Elon Musk's acquisition of Twitter was affected by the assessment of the number of fake accounts. One can imagine the seriousness of such problems, which may have reached a level where companies can no longer sit back and do nothing. In terms of overall quantity, there is reason to believe that what this report discloses is only the tip of the iceberg. This undoubtedly raises further requirements for promoting benign changes in global cyberspace governance, and it requires willing stakeholders to jointly assume their due responsibilities and make their due efforts to clean up global social media platforms.

The author is director at the Research Center for Cyberspace Governance, Fudan University. opinion@globaltimes.com.cn