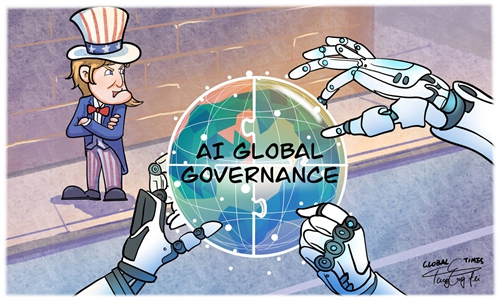

US new AI strategy aims to push open source domestically and closed source globally

Illustration: Chen Xia/GT

On Wednesday, the US government released its AI Action Plan, a strategic document aimed at securing the country's lead in what it calls "a fight that will define the 21st century." The plan lays out more than 90 concrete recommendations spanning technological innovation, application development and global rule-making in AI. Many observers see it as the current US administration's most significant policy directive on AI, one that may reshape the global economic and political landscape. Seen in the context of the broader US AI strategy, the AI Action Plan is an "overt scheme with hidden agendas."

Why can it be called an "overt scheme"? First, the plan was drafted through a highly public process. When the Trump administration took office, it inherited growing public concern following DeepSeek's global breakout. Driven by a strong sense of urgency, Washington set out to overhaul US AI policy. To "gather collective wisdom," the administration invited suggestions and feedback from the public. Industry experts, think tanks and tech giants also weighed in, and in April the US government published more than 10,000 public comments it had received in response to the request. The aim was to prepare the public for the new policy and to forge a domestic consensus, especially around the shift in AI policy. The process also served to test the waters for a policy direction that had already been set.

Second, long before the plan was formally put on paper, the US had already begun executing its AI strategy in practice. Washington is currently accelerating efforts to shape and lead the global AI ecosystem. Its playbook is clear. On the one hand, the US seeks to ease some chip-related restrictions as leverage in investment and tariff negotiations with other countries, using both technological superiority and policy tools to seize global market share. On the other hand, through the Stargate Initiative, it is expanding AI infrastructure and its market presence worldwide. In this sense, the AI Action Plan is more of a "final announcement."

But what are the "hidden agendas"? Beyond the expected emphasis on AI development, the plan includes some relatively "new" elements worth noting. For example, it recommends updating Federal procurement guidelines to "ensure that the government only contracts with frontier large language model developers who ensure that their systems are objective and free from top-down ideological bias," while also claiming it will evaluate whether Chinese AI models are subject to the Chinese government's so-called "censorship." This appears to be a classic case of deflecting attention and shifting blame.

The backdrop here is telling: the AI chatbot Grok recently faced bans in some countries over racially offensive and politically inflammatory content, including insults directed at foreign leaders. These controversies challenge the Trump administration's AI deregulatory agenda and hamper the international rollout of American AI models. It is against this backdrop that the US government addressed the question of so-called AI values in the AI Action Plan.

Another example: on paper, the plan appears to support open access to models and weights, suggesting a commitment to transparency. In practice, however, top US firms such as Meta and OpenAI have been reluctant to open-source their models, citing "risks of foreign rivals catching up." Scrutinize closely and you'll see that the US push for open source is not aimed at the global AI ecosystem. Rather, it is designed to give domestic startups and academia access to large-scale computing power and to promote the adoption of open-source models by small and medium-sized enterprises in the country.

It can thus be expected that, as the AI technology ecosystem evolves, the US will adopt a more "pragmatic" strategy of pushing open source domestically and closed source globally to maintain its so-called technological leadership. However, the proposed AI Action Plan is one thing and actual implementation is another, and the results will depend on a host of internal and external factors. Only time will tell how it will roll out.

The author is the director of the Institute of Sci-Tech and Cyber Security Studies at the China Institutes of Contemporary International Relations. opinion@globaltimes.com.cn